This is the fifth post in my Containerizing NServiceBus Endpoints with Docker series.

Part 1: Introduction/Readying your System/Local Azure Resources

Part 2: Live Azure Resources

Part 3: Dockerize!

Part 4: Docker Compose

Part 5: Containerizing Sql Server and switching to Sql Persistence

In Part 4, we looked at how docker compose centralized the orchestration of our application's containers. We learned about .yml files and how to use docker-compose up.

If you recall from Part 1, one of my goals was to offload the infrastructure required by NServiceBus's persistence and transport to Azure so I could focus on learning Docker and containerizing the solution. Now that I've made some headway, I'll refocus on using Sql Persistence instead of Azure Storage Persistence for NServiceBus persistence.

This post will cover:

- changes to the solution

- switching NServiceBus persistence from using Azure Storage Persistence to Sql Persistence

- adding an event

- containerizing Sql Server

- coordinating the starting and stopping of the application using PowerShell, docker compose and docker run.

Let's get started!

Changes to the Solution

Changing From Azure Storage Persistence to Sql Persistence

To begin with, I've added a new branch to the existing solution named SqlServerContainerAndPersistence. Please go pull this new branch now.

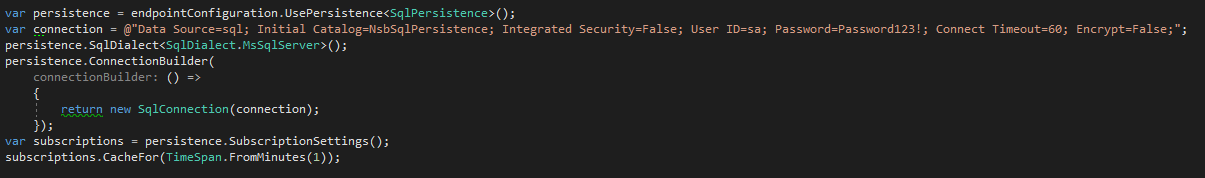

Opening the solution, you'll see that the Global.asax and EndpointConfig.cs files have been updated to use SqlPersistence. Here is an excerpt of that config code from both files:

The only difference between the two files is that EndpointConfig.cs also makes this call:

```csharp
SqlHelper.EnsureDatabaseExists(connection);
```

This configuration code is taken from Particular's Simple SQL Persistence Usage sample. The EnsureDatabaseExists call checks whether the database exists and, if it doesn't, creates it, so Sql Persistence has a database in which to set up its schema. The NuGet packages needed to use Sql Server as the persistence mechanism have also been added.

Because of the order in which the containers are started, with the mvc container last, I opted to check for the existence of the database from the endpoint project. The endpoint container is started before the mvc container, so in this specific case the check only needs to happen once, in the endpoint.
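SqlHelper.EnsureDatabaseExists is C# from Particular's sample, but the idea is easy to try by hand against the sql container once it's running. Here's a minimal PowerShell sketch of that kind of check. The function name Get-EnsureDatabaseSql and the database name in the usage comment are made up for illustration, running the query assumes the SqlServer module (which provides Invoke-Sqlcmd) is installed, and this isn't necessarily the exact T-SQL the sample's helper uses.

```powershell
# Hypothetical helper (not part of the solution): builds T-SQL that creates a
# database only when no database with that name exists yet.
function Get-EnsureDatabaseSql {
    [CmdletBinding()]
    param(
        # Restrict the name so it can be embedded in the T-SQL text safely.
        [Parameter(Mandatory)]
        [ValidatePattern('^[A-Za-z0-9_]+$')]
        [string] $DatabaseName
    )
    # DB_ID returns NULL when the database does not exist.
    "IF DB_ID(N'$DatabaseName') IS NULL CREATE DATABASE [$DatabaseName];"
}

# Usage (assumes the SqlServer module and the sql container from this post;
# 'NsbPersistence' is a made-up database name):
# $query = Get-EnsureDatabaseSql -DatabaseName 'NsbPersistence'
# Invoke-Sqlcmd -ServerInstance $sqlContainerIp -Database master `
#     -Username sa -Password 'Password123!' -Query $query
```

The query runs against master because the target database may not exist yet.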

Adding an Event

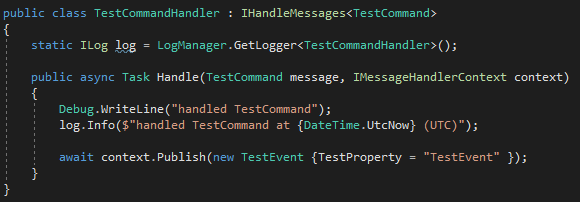

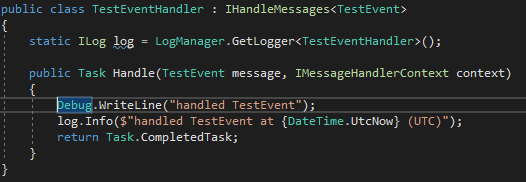

To illustrate how splitting NServiceBus transport (currently handled by Azure Storage Queues) from NServiceBus persistence (Sql Persistence) affects the technical artifacts each technology creates, I've added a new event, TestEvent, which is published at the end of TestCommandHandler and handled in a new handler, TestEventHandler.

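Adding TestEvent requires a subscription for that event to be registered in the EndpointConfig.cs class, even though the event is handled in the same endpoint that publishes it. Because Azure Storage Queues has no native publish/subscribe, NServiceBus uses message-driven subscriptions here: the subscribing endpoint sends a subscription request to the publisher it has been told about, and the publisher stores that subscription using its persistence. Using NServiceBus's Message Routing, I register "NSB6SelfHostedClientMVC.Handlers" as the publisher of TestEvent: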

```csharp
var routingSettings = transportSettings.Routing();

routingSettings.RegisterPublisher(typeof(NSB6SelfHostedClientMVC.Messages.Events.TestEvent), "NSB6SelfHostedClientMVC.Handlers");
```

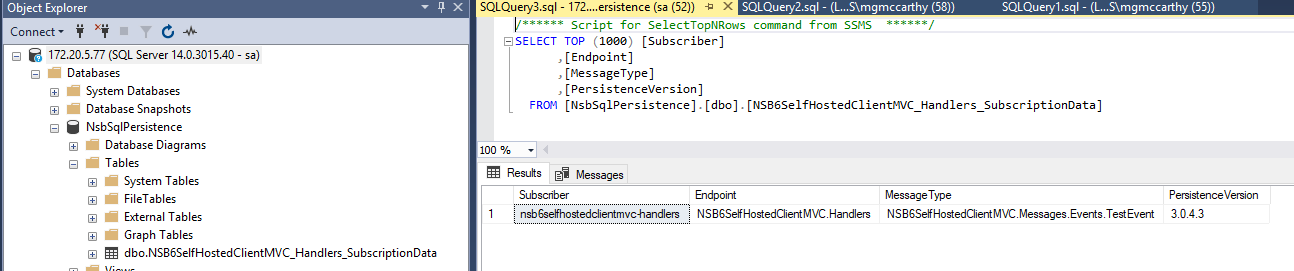

I'll demonstrate later how this message routing turns into a database entry in the table created by Sql Persistence.

Now that we have Sql Persistence set up, we need to find a way to containerize a Sql Server instance and have that instance available to NServiceBus to use for its persistence needs.

Containerizing Sql Server

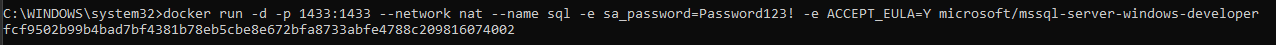

To containerize Sql Server, I chose the microsoft/mssql-server-windows-developer image on Docker Hub, which gave me a running head start. After reading through some specifics, I settled on running a container from that image as follows:

```powershell
docker run -d -p 1433:1433 --network nat --name sql -e sa_password=Password123! -e ACCEPT_EULA=Y microsoft/mssql-server-windows-developer
```

That's a good amount of parameters. Let's examine them one at a time:

- `-d`: run the container in detached mode.

- `-p`: we've seen this before with the mvc container, when we needed our IIS server to be reachable on port 80. In this case we're dealing with Sql Server, so we map port 1433 (Sql Server's default port) on the host to port 1433 in the container.

- `--network`: this is a new one, and I'll touch on its significance later in this post. Let's just say it helps to have all your containers on the same network when you're trying to make them communicate ;)

- `--name`: a human-readable name for the running container.

- `-e sa_password=Password123!`: I know, what a great password! The `-e` parameter sets an environment variable in the container. You can pass in any number of name/value pairs this way; which ones have an effect is governed by the image you're using to run the container.

- `-e ACCEPT_EULA=Y`: confirms acceptance of the end user licensing agreement found here.
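If you'd like the flags and their meanings to live side by side in a script, the same command can be built as a PowerShell argument array. This is a sketch, not something in the solution: Get-SqlContainerRunArgs is a hypothetical name, and its defaults just mirror the values used in this post.

```powershell
# Hypothetical helper: returns the docker run arguments for the sql container,
# one annotated flag per line. Defaults mirror the values used in this post.
function Get-SqlContainerRunArgs {
    [CmdletBinding()]
    param(
        [string] $Name = 'sql',
        [string] $Network = 'nat',
        [string] $SaPassword = 'Password123!',
        [string] $Image = 'microsoft/mssql-server-windows-developer'
    )
    @(
        'run'
        '-d'                              # detached: print the container id and return
        '-p', '1433:1433'                 # host port 1433 -> Sql Server's default port in the container
        '--network', $Network             # same network as the endpoint and mvc containers
        '--name', $Name                   # readable name for docker stop/rm/inspect
        '-e', "sa_password=$SaPassword"   # environment variable the image uses for the sa password
        '-e', 'ACCEPT_EULA=Y'             # accept the end user license agreement
        $Image                            # image to run the container from
    )
}

# Usage: each array element is passed to docker as a separate argument.
# $runArgs = Get-SqlContainerRunArgs
# docker @runArgs
```

Building the arguments as an array also sidesteps quoting surprises, since PowerShell hands each element to docker as its own argument.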

Let's go ahead and run this image in a container. Execute:

```powershell
docker run -d -p 1433:1433 --network nat --name sql -e sa_password=Password123! -e ACCEPT_EULA=Y microsoft/mssql-server-windows-developer
```

After running the command you should see the id of the container returned to the console:

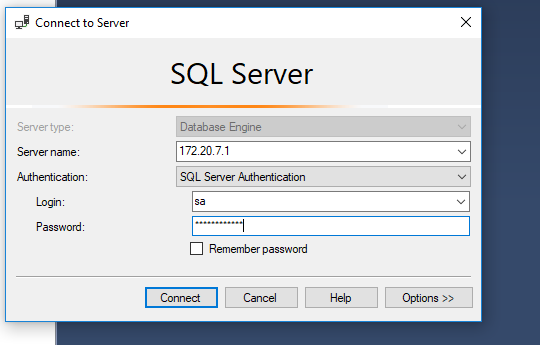

Get the IP address of the running container by executing:

```powershell
docker inspect --format="{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}" sql
```

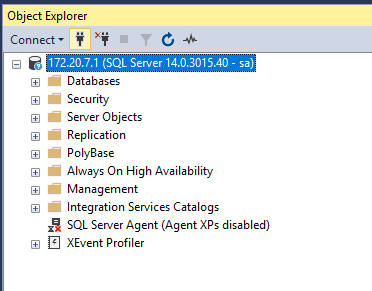

Then fire up Sql Server Management Studio. Once SSMS opens, paste the IP address in as the server name and log in with SQL Server Authentication as sa, using the password you passed when starting the container. You should be able to connect to the Sql Server instance running in the container.
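You'll be looking up container IPs more than once in this post, so the docker inspect call can be wrapped in a small function. A minimal sketch: Get-ContainerIp is a hypothetical helper, and it assumes the container is attached to a single network (with several networks, the template would run the addresses together).

```powershell
# Hypothetical helper: returns the IP address of a container by name,
# assuming the container is attached to exactly one network.
function Get-ContainerIp {
    [CmdletBinding()]
    param([Parameter(Mandatory)][string] $Name)
    # Single quotes keep PowerShell from touching the Go template.
    $ip = docker inspect --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' $Name
    if ($LASTEXITCODE) { throw "docker inspect failed for container '$Name'" }
    "$ip".Trim()
}

# Usage:
# Get-ContainerIp sql
```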

To stop the container, issue:

```powershell
docker stop sql
```

To remove the container, issue:

```powershell
docker rm sql
```

So far, we've changed persistence and containerized a sql server instance. Now let's coordinate these docker containers in order to run our application.

Docker Compose

My first attempt at using docker compose to coordinate all three containers failed, so if you're not interested in reading about why and would rather jump to the approach that did work, skip ahead to the Container Coordination Using PowerShell and Docker Compose section.

To coordinate our new sql server container with the rest of our application, we need to return to our docker-compose.yml file and start the sql server container first, the endpoint container second, and the mvc container last.

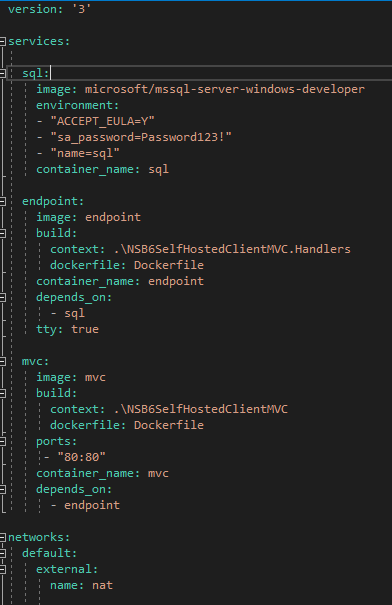

Here is what the .yml file looked like at the point I tried to take this approach:

There are some new items added to the yml file:

- networks: I alluded to this in the previous section when passing in nat for the --network parameter. nat is the default network your containers run in on Windows if you do not specify other network options in Docker. Since all three of my containers need to talk to each other, I specify at the end of the yml file that all three use the same network name (nat).

- depends_on: this allows containers to be "ordered" for startup. In this case, I need the sql server container created first so the endpoint container can use it for NServiceBus persistence. I need the mvc container to start last, because the endpoint container runs the SqlHelper.EnsureDatabaseExists(connection); check to build the persistence database and schema.

More information on the nat network can be found here, and more information on Docker networking (which is a large subject unto itself) can be found here and here.

For the added sql section, you can see that we're passing in the same information we passed to docker run when running the image directly from the console. In the .yml file, we're saying we want to use the microsoft/mssql-server-windows-developer image directly, passing in all of the variables using the environment sub-node, and naming the container sql.
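To confirm which containers actually ended up on the nat network, and with which addresses, you can ask docker network inspect for just that. A sketch with a hypothetical function name; it assumes the IPv4Address field is populated for your containers, which is worth checking against the raw docker network inspect nat output on your machine first.

```powershell
# Hypothetical helper: lists the containers attached to a Docker network with their IPv4 addresses.
function Get-NetworkContainers {
    [CmdletBinding()]
    param([string] $Network = 'nat')
    # .Containers maps container ids to entries carrying Name and IPv4Address (CIDR form, e.g. 172.x.x.x/16).
    $template = '{{range .Containers}}{{.Name}} {{.IPv4Address}}{{println}}{{end}}'
    $lines = docker network inspect --format $template $Network
    if ($LASTEXITCODE) { throw "docker network inspect failed for network '$Network'" }
    foreach ($line in $lines) {
        if ([string]::IsNullOrWhiteSpace($line)) { continue }
        # Split "name 172.20.0.2/16" into the name and the address, dropping the prefix length.
        $name, $cidr = $line.Trim() -split ' ', 2
        [pscustomobject]@{
            Name      = $name
            IPAddress = ($cidr -split '/')[0]
        }
    }
}

# Usage:
# Get-NetworkContainers -Network nat | Format-Table
```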

With the new .yml file in place, I dropped to the command line and issued:

```powershell
docker-compose up -d
```

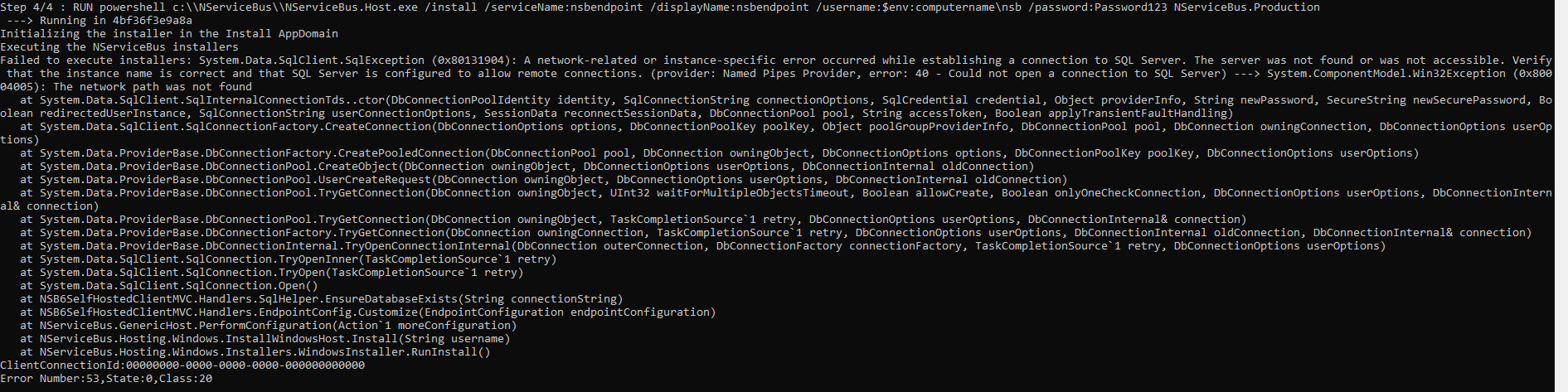

and received the following exception:

The failure was within the setup of NServiceBus.Host in the endpoint image. Regardless of whether NServiceBus.Host is started after it's installed (the default behavior) or not, the host attempts to create all the resources it needs for NServiceBus's transport and persistence. In my case, NServiceBus.Host was being installed as part of building the endpoint image, and creating the Sql Persistence database and schema requires a running sql server container. That container wasn't running yet, so the installation threw this exception.

Since docker-compose builds all the images first and only THEN starts the containers, and I found no way in the .yml file to run one container before the other images are built, I had no way to get the running sql server container I needed.

Time for plan B.

Container Coordination Using PowerShell and Docker Compose

I needed a way to execute docker run on the microsoft/mssql-server-windows-developer image to get a running sql server container before executing docker-compose up -d, which would build both my endpoint and mvc images, and then start running containers for each.

This sounds like a job for PowerShell!

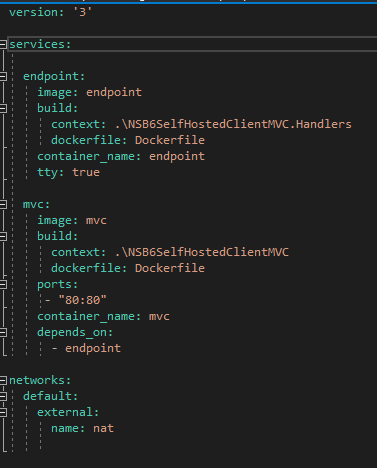

With this new approach, let's revisit the .yml file:

The .yml file looks almost exactly the same as it did in Part 4, except for the addition of the networks node, which I covered in the Docker Compose section.

There are two PowerShell scripts in the solution, StartApplication.ps1 and StopApplication.ps1.

StartApplication.ps1 uses docker run to directly run a container from the microsoft/mssql-server-windows-developer image, passing in all the parameters we did when initially running it near the beginning of this post. It then calls docker-compose up -d which builds the images and starts the containers specified in our .yml file.

```powershell
docker run -d -p 1433:1433 --network nat --name sql -e sa_password=Password123! -e ACCEPT_EULA=Y microsoft/mssql-server-windows-developer

docker-compose up -d

$sqlContainerIp = docker inspect --format="{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}" sql
$mvcContainerIp = docker inspect --format="{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}" mvc

Write-Host -NoNewline "sql container ip:" $sqlContainerIp
Write-Host ""
Write-Host -NoNewline "mvc container ip:" $mvcContainerIp
```
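Note that StartApplication.ps1 moves straight from docker run to docker-compose up -d; nothing in it waits for Sql Server inside the sql container to start accepting connections. If you want an explicit check between those two lines, one option is to poll port 1433 on the sql container's IP. A minimal sketch: Wait-TcpPort is a hypothetical helper, and an open port is only a proxy for readiness, not a guarantee that logins will succeed.

```powershell
# Hypothetical helper: polls a TCP port until it accepts a connection or the timeout expires.
# An open port suggests Sql Server is up; it does not prove that logins will succeed.
function Wait-TcpPort {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string] $HostName,
        [int] $Port = 1433,
        [int] $TimeoutSeconds = 120,
        [int] $PollMilliseconds = 1000
    )
    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    while ((Get-Date) -lt $deadline) {
        $client = New-Object System.Net.Sockets.TcpClient
        try {
            # Give each attempt at most one poll interval; Wait returns $true once connected.
            if ($client.ConnectAsync($HostName, $Port).Wait($PollMilliseconds)) {
                return $true
            }
        } catch {
            # A refused connection surfaces as an exception: not ready yet, so pause and retry.
            Start-Sleep -Milliseconds $PollMilliseconds
        } finally {
            $client.Close()
        }
    }
    $false
}

# Usage, between docker run and docker-compose up -d:
# $sqlIp = docker inspect --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' sql
# if (-not (Wait-TcpPort -HostName $sqlIp -Port 1433)) { throw 'Sql Server did not come up in time' }
```

The container IP is used rather than localhost because, as elsewhere in this post, the containers are reached by their nat addresses.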

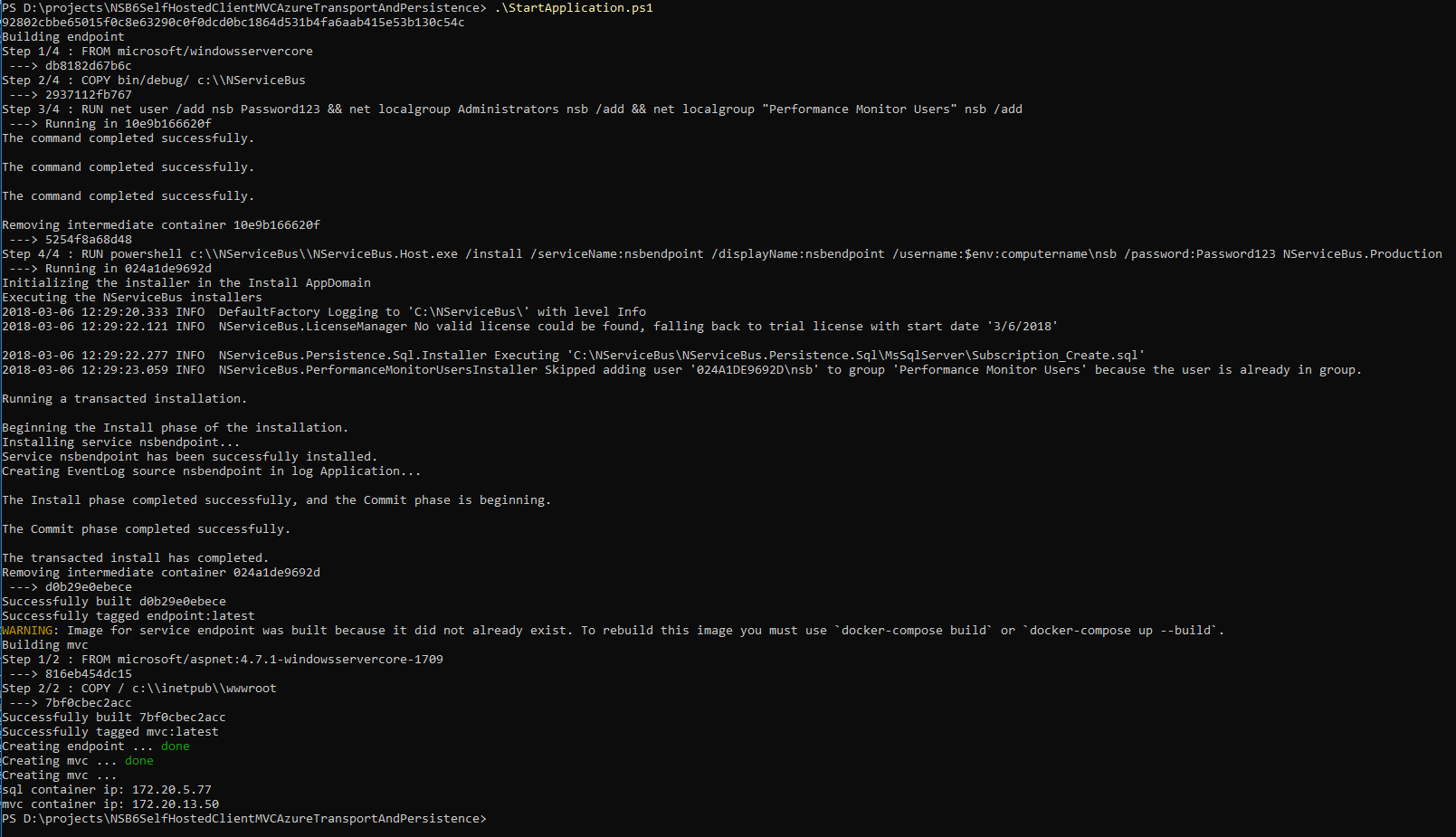

Executing .\StartApplication.ps1 should result in output similar to this:

In the first line, the id of the sql server container is returned to the console, then the PowerShell script executes docker-compose up -d which creates the endpoint and mvc images and then starts those containers.

The last two lines contain the IP address of both the mvc container as well as the sql container so you don't have to execute this for each container:

```powershell
docker inspect --format="{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}" [CONTAINER ID]
```

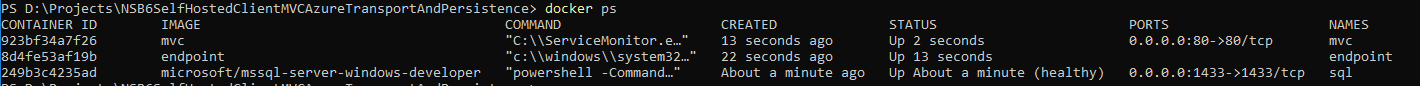

After the script is done executing, you can see all three running containers by issuing:

```powershell
docker ps
```

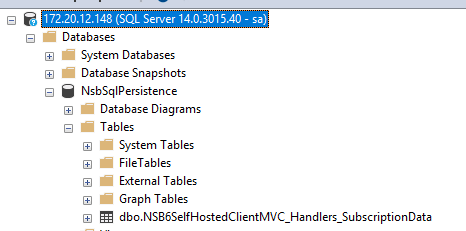
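If you'd rather have a script tell you whether everything came up than eyeball the docker ps output, the names can be compared in PowerShell. A sketch: Test-ContainersRunning is a hypothetical helper, and it assumes the container names sql, endpoint and mvc used throughout this post.

```powershell
# Hypothetical helper: returns $true when every expected container appears in docker ps.
function Test-ContainersRunning {
    [CmdletBinding()]
    param(
        # Container names used in this post.
        [string[]] $Expected = @('sql', 'endpoint', 'mvc')
    )
    # docker ps lists running containers only; --format keeps just their names.
    $running = @(docker ps --format '{{.Names}}')
    if ($LASTEXITCODE) { throw 'docker ps failed' }

    $missing = @($Expected | Where-Object { $running -notcontains $_ })
    if ($missing.Count -gt 0) {
        Write-Warning "Not running: $($missing -join ', ')"
    }
    $missing.Count -eq 0
}

# Usage:
# if (-not (Test-ContainersRunning)) { exit 1 }
```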

You can see the Sql Persistence tables created by the endpoint container by logging into SSMS with the sql container ip printed at the end of the .\StartApplication.ps1 script:

Looking at the database, you'll see the subscription entry in the dbo.NSB6SelfHostedClientMVC_Handlers_SubscriptionData table, which contains the message type to which the NSB6SelfHostedClientMVC.Handlers endpoint is subscribed:
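If you'd rather check the subscription from the host without clicking through SSMS, Invoke-Sqlcmd can run the query for you. A sketch assuming the SqlServer PowerShell module is installed: Get-SubscriptionRows is a hypothetical helper, and the database name is a parameter because it comes from your connection string. Depending on the module version, you may also need -TrustServerCertificate to connect to a server using a self-signed certificate.

```powershell
# Hypothetical helper: returns the rows of the subscription table created by Sql Persistence.
# Requires Invoke-Sqlcmd from the SqlServer module (Install-Module SqlServer).
function Get-SubscriptionRows {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string] $ServerInstance,   # the sql container's IP address
        [Parameter(Mandatory)][string] $Database,         # the database named in your connection string
        [string] $Password = 'Password123!',
        [string] $Table = 'dbo.NSB6SelfHostedClientMVC_Handlers_SubscriptionData'
    )
    Invoke-Sqlcmd -ServerInstance $ServerInstance -Database $Database `
        -Username 'sa' -Password $Password -Query "SELECT * FROM $Table;"
}

# Usage ('YourDatabase' is a placeholder):
# Get-SubscriptionRows -ServerInstance $sqlContainerIp -Database 'YourDatabase' | Format-Table
```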

To test that the mvc container is reachable and sends a command that the endpoint container handles successfully, take the mvc container ip printed at the end of the .\StartApplication.ps1 script, open your browser and paste the IP address into the address bar.
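The browser check can also be scripted. This sketch only confirms that the mvc container's home page answers with HTTP 200; whether the resulting command was handled is still something to confirm in the endpoint's log. Test-MvcHomePage is a hypothetical helper.

```powershell
# Hypothetical helper: returns $true when the mvc container's home page answers with HTTP 200.
function Test-MvcHomePage {
    [CmdletBinding()]
    param([Parameter(Mandatory)][string] $IpAddress)
    $uri = "http://$IpAddress/"
    try {
        # -UseBasicParsing avoids the Internet Explorer dependency in Windows PowerShell.
        $response = Invoke-WebRequest -Uri $uri -UseBasicParsing -TimeoutSec 30
        $response.StatusCode -eq 200
    } catch {
        # Connection failures and non-success status codes both end up here.
        Write-Warning "Request to $uri failed: $($_.Exception.Message)"
        $false
    }
}

# Usage:
# Test-MvcHomePage -IpAddress $mvcContainerIp
```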

Once you see this generic MVC home page, execute:

```powershell
docker exec -it endpoint cmd
```

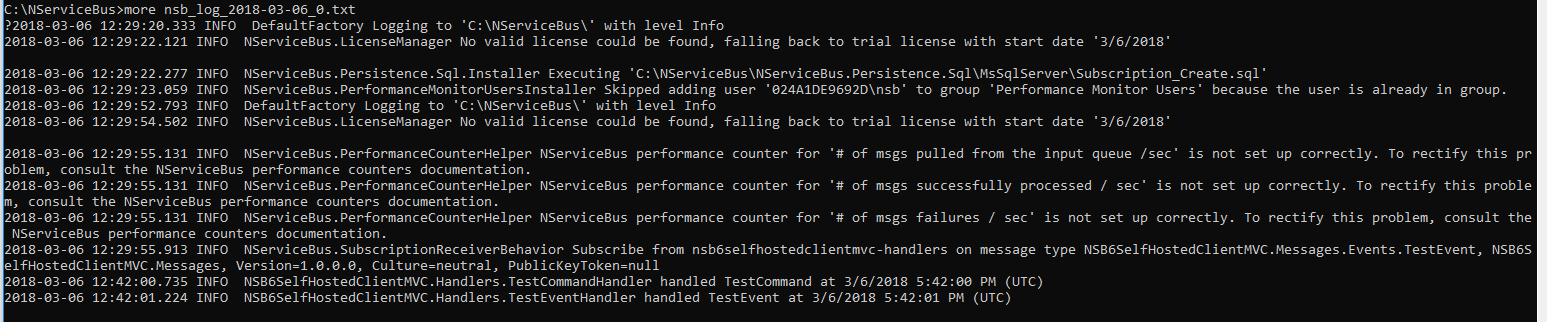

This puts you on the endpoint container's command line. cd to the NServiceBus directory, then issue a dir. You'll see the nsb log file, which contains the output of the handlers that have handled their messages.

Issue the more command with the name of your nsb log file; the end of the log file should contain output like this:

The bottom two lines show that TestCommand was handled as well as TestEvent.
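If you only want the end of the log, you can skip the interactive session and have docker exec print it. A sketch under two assumptions: the endpoint image includes Windows PowerShell, and you supply the log file's full path yourself, since it depends on your setup. Get-EndpointLogTail is a hypothetical helper.

```powershell
# Hypothetical helper: prints the last lines of a log file inside the endpoint container.
# Assumes the endpoint image includes Windows PowerShell; $LogPath must be supplied by you.
function Get-EndpointLogTail {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string] $LogPath,   # full path of the nsb log file inside the container
        [string] $Container = 'endpoint',
        [int] $Lines = 20
    )
    docker exec $Container powershell -NoProfile -Command "Get-Content -Path '$LogPath' -Tail $Lines"
    if ($LASTEXITCODE) { throw "docker exec failed for container '$Container'" }
}

# Usage (the path is a placeholder):
# Get-EndpointLogTail -LogPath '<path to your nsb log file>' | Select-String 'TestCommand|TestEvent'
```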

Cleaning Up

Now that we've made it so easy to start our application, it would be a shame to stop there. Let's make it easy to stop it as well.

Looking at StopApplication.ps1:

```powershell
docker-compose down
docker stop sql
docker rm sql
docker rmi mvc
docker rmi endpoint
```

We can see the use of docker-compose down. This command does the exact opposite of, you guessed it, docker-compose up: it "undoes" the work done by docker-compose up, with one caveat. Images built as a result of invoking docker-compose up are not deleted when invoking docker-compose down.

In addition to docker-compose down, you can see docker commands to stop the sql container and then remove it. Remember, the sql container is not included in the .yml file on which docker-compose relies to do its magic; it's started using docker run in StartApplication.ps1, so we need to stop and remove this container by hand.

Because docker-compose down does not remove images that were created as part of docker-compose up, we need to manually remove both the mvc and endpoint images as well.
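StopApplication.ps1 prints errors if it's run when some of these pieces are already gone, for example after a failed start. A re-runnable variation could check for each container and image before removing it. This is a sketch, not the script in the solution: Stop-Application is a hypothetical name, and its defaults mirror the names used in this post.

```powershell
# Hypothetical re-runnable take on StopApplication.ps1: each step is skipped
# when there is nothing to remove. Container and image names mirror the post.
function Stop-Application {
    [CmdletBinding()]
    param(
        [string] $SqlContainer = 'sql',
        [string[]] $Images = @('mvc', 'endpoint')
    )
    # Stops and removes the containers (and any networks it created) from docker-compose.yml.
    docker-compose down

    # The sql container was started with docker run, so compose does not know about it.
    # docker ps -a also lists stopped containers.
    $containers = @(docker ps -a --format '{{.Names}}')
    if ($containers -contains $SqlContainer) {
        docker stop $SqlContainer | Out-Null
        docker rm $SqlContainer | Out-Null
    }

    # docker-compose down leaves built images behind; docker images -q prints
    # nothing when an image does not exist.
    foreach ($image in $Images) {
        if (docker images -q $image) {
            docker rmi $image | Out-Null
        }
    }
}
```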

Again, using user-friendly names for your containers makes cleanup like this much easier.

Wrap Up

In this post, we migrated NServiceBus persistence away from Azure Storage Persistence to Sql Persistence, containerized a sql server instance, and used PowerShell to coordinate docker run with docker-compose to create three containers which run our NServiceBus application.

Future posts in this series might include migrating to MSMQ as the NServiceBus transport (when it becomes available as part of the stable microsoft/aspnet:4.7.1-windowsservercore image on Docker Hub) and/or deploying this application to the cloud with Docker Swarm or Kubernetes.